One of my first articles (Computers in the cloud…and it’s raining!) sparked a reader’s question: how green is the cloud? This got me thinking. A few weeks ago, I read about the impact of simply saying “Thank You” to Gen AI. Turns out, all those polite extra prompts add up to a surprising amount of computation and power. This pushed me to dig deeper.

So, I reached out to an ex-colleague, Joel Gibb, who heads the NZ operations at CDC, a major data center provider across Australia and NZ. And guess what? He kindly offered me a tour of their facilities!

My visit to CDC was a stark contrast to my first-ever data center tour, back in 2000 in Singapore. On that visit, I didn’t bring a jacket and was freezing! Modern data centers don’t need super-cooled air, so they no longer feel like a chiller.

The Power Thirst of Data Centers

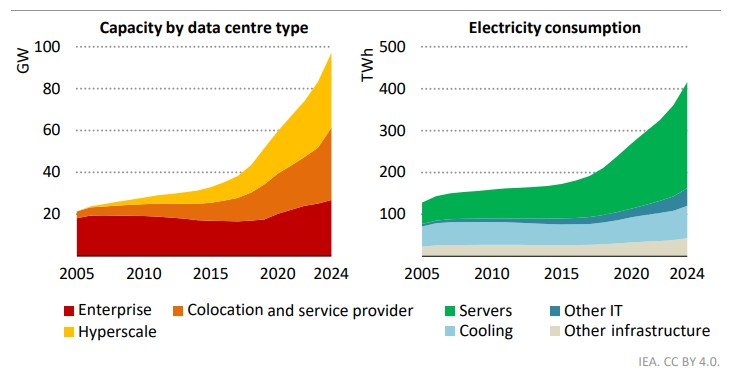

Let’s talk numbers. According to the IEA (International Energy Agency) Global Energy Review 2025, data centers gulped down about 460 TWh (terawatt-hours) of electricity in 2024. That corresponds to roughly 90 to 95 GW (gigawatts) of installed data center capacity.
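The two figures measure different things: TWh is energy used over a year, while GW is capacity. A quick back-of-the-envelope sketch shows how they relate, assuming an 8,760-hour year. This is my own arithmetic, not a figure from the IEA: 460 TWh spread over a year is an average draw of about 52.5 GW, which would mean 90 to 95 GW of installed capacity runs at roughly 55% to 58% utilization on average.

```python
HOURS_PER_YEAR = 8760  # assumption: ignores leap years


def average_power_gw(annual_twh: float) -> float:
    """Convert annual energy (TWh) to the average continuous power draw (GW)."""
    # 1 TWh = 1,000 GWh, and GWh divided by hours gives GW
    return annual_twh * 1000 / HOURS_PER_YEAR


def implied_utilization(annual_twh: float, capacity_gw: float) -> float:
    """Fraction of installed capacity that is drawn on average over the year."""
    return average_power_gw(annual_twh) / capacity_gw


print(f"Average draw: {average_power_gw(460):.1f} GW")
for cap in (90, 95):
    print(f"Utilization at {cap} GW installed: {implied_utilization(460, cap):.0%}")
```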

Consumption has risen sharply since 2017. Why? Cloud computing, social media, and, in recent years, AI are the big drivers. To give you some perspective, a 50 MW (megawatt) data center consumes as much electricity annually as 50,000 households. And get this: the largest data center being planned, in the UAE, has a whopping 5 GW capacity!
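To see where the household comparison comes from, here is a rough sketch that assumes the facility draws its full rated power all year (a load factor of 1.0, which is my assumption; real facilities usually run below that). Under that assumption, each household in the comparison uses about 8.76 MWh a year, and a 5 GW campus would use around 43.8 TWh a year, close to a tenth of the 460 TWh global total.

```python
HOURS_PER_YEAR = 8760  # assumption: ignores leap years


def annual_energy_mwh(capacity_mw: float, load_factor: float = 1.0) -> float:
    """Annual energy (MWh) for a facility drawing capacity_mw * load_factor continuously."""
    return capacity_mw * load_factor * HOURS_PER_YEAR


def implied_household_use_mwh(capacity_mw: float, households: int,
                              load_factor: float = 1.0) -> float:
    """Per-household annual use (MWh) implied by an 'X MW = N households' comparison."""
    return annual_energy_mwh(capacity_mw, load_factor) / households


print(f"50 MW at full load: {annual_energy_mwh(50):,.0f} MWh/year")
print(f"Implied use per household: {implied_household_use_mwh(50, 50_000):.2f} MWh/year")
# 5 GW = 5,000 MW; divide MWh by 1e6 to get TWh
print(f"5 GW at full load: {annual_energy_mwh(5_000) / 1e6:.1f} TWh/year")
```

Lowering `load_factor` shrinks all three numbers proportionally, so the household comparison is best read as an upper bound.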

What’s really interesting is that about 85% of global data center electricity consumption comes from the US, EU, and China. For a local comparison, the total installed capacity of CDC’s data centers in NZ (as per their website) is 224 MW. By 2030, total electricity consumed by data centers is projected to roughly double, to around 900 TWh. And that’s assuming efficiency improvements keep pace!
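Doubling in six years sounds dramatic, and expressed as a compound annual growth rate it works out to roughly 12% a year. A quick check, assuming the 460 TWh figure as the 2024 baseline and 900 TWh in 2030:

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` over `years`."""
    return (end / start) ** (1 / years) - 1


rate = implied_cagr(460, 900, years=2030 - 2024)
print(f"Implied growth: {rate:.1%} per year")
```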

The Green Energy Mix

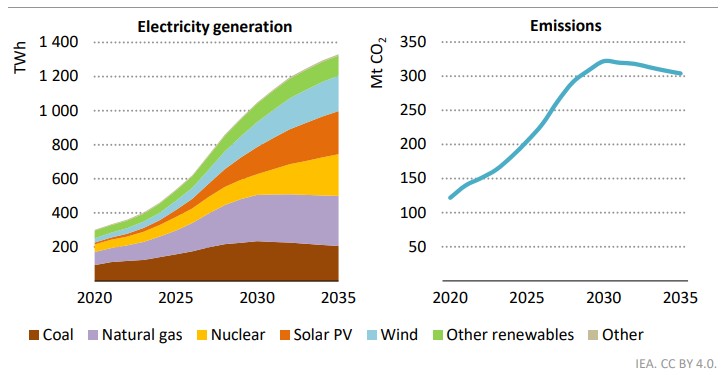

Globally, just under half (44%) of the electricity used by data centers comes from renewable sources. The rest is a mix of coal (30%) and natural gas (26%). But this is set to change! By 2035, more than half is expected to be renewable. This shift is projected to cut data centers’ share of total global CO2 emissions from a peak of 3% to 1%. Good news!

But there are other factors in the “green power” equation for a data center: backup generators and UPS (Uninterruptible Power Supply) batteries. Most data centers have both diesel-powered backup generators and a UPS battery bank. Why both? The UPS kicks in instantly when power fails, keeping systems running for 5-7 minutes. This gives the diesel generators enough time to start up and take over until the main supply is restored.
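The battery side of this is simple arithmetic: energy equals power multiplied by time. A minimal sketch, assuming a hypothetical 1 MW IT load and ignoring inverter losses, battery ageing, and depth-of-discharge limits (all of which real UPS sizing has to add margin for):

```python
def ups_energy_kwh(load_kw: float, ride_through_min: float) -> float:
    """Energy (kWh) a UPS must deliver to carry `load_kw` for `ride_through_min` minutes."""
    return load_kw * ride_through_min / 60


# Hypothetical 1 MW (1,000 kW) IT load, bridged for the 5-7 minute window
for minutes in (5, 7):
    print(f"{minutes} min: {ups_energy_kwh(1_000, minutes):.0f} kWh")
```

The short window is the point: the batteries only need to hold tens to low hundreds of kWh per MW, because the generators, not the batteries, carry longer outages.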

Efficiency: More Than Just Watts

Another fascinating aspect is Power Usage Effectiveness (PUE). It’s a simple ratio: total facility input power divided by the power consumed by IT equipment. The ideal, but practically impossible, PUE is 1, where all input power goes solely to IT workloads. But what about lights, building management, and, most importantly, cooling? Cooling alone can eat up between 10% and 30% of the load.

A PUE of 1.5 or below (meaning about 67% of input power reaches IT equipment) is considered good. A PUE of 1.25 (80% to IT) would be outstanding! And get this: Google reported a trailing twelve-month PUE of an incredible 1.1 across all its data centers! They achieve this by optimizing their data centers with machine learning, including artificial neural networks. They even design their own highly efficient servers.
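Because PUE is total facility power divided by IT power, the share of power that actually reaches IT equipment is simply 1/PUE. A small sketch, using a hypothetical facility (1,000 kW of IT load, 300 kW of cooling, and 50 kW for lighting and building systems; these numbers are illustrative, not CDC’s or Google’s):

```python
def pue(it_kw: float, cooling_kw: float, other_kw: float) -> float:
    """PUE = total facility power / IT equipment power."""
    total_kw = it_kw + cooling_kw + other_kw
    return total_kw / it_kw


def it_share(pue_value: float) -> float:
    """Fraction of facility power that reaches IT equipment (1 / PUE)."""
    return 1 / pue_value


# Hypothetical facility: cooling is 300 / 1,350, about 22% of total load
example = pue(it_kw=1_000, cooling_kw=300, other_kw=50)
print(f"Example PUE: {example:.2f}")
for value in (1.5, 1.25, 1.1):
    print(f"PUE {value}: {it_share(value):.0%} of power reaches IT")
```

At Google’s reported 1.1, roughly 91% of the power goes to IT, compared with about 67% at a PUE of 1.5.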

Cooling Down with Liquid

Back to my CDC tour. Why didn’t it feel like a chiller? They use liquid cooling to keep systems cool, rather than relying only on a blast of cold air. This also leads to a better PUE, because liquid carries heat away far more efficiently than air.

However, as they say, one solution often creates another problem. Water usage in data centers is becoming a new issue, as a large amount of water can be lost to evaporation, particularly in evaporative cooling systems. Similar to PUE, this is monitored using Water Usage Effectiveness (WUE): annual site water use divided by IT equipment energy, measured in litres per kWh. Thankfully, closed-loop liquid cooling systems drastically reduce water usage and even allow waste heat to be reused.
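WUE works like PUE, except the numerator is water rather than power, and lower is better (a site that uses no water scores 0). A minimal sketch, assuming a hypothetical site with a 1,000 kW average IT load that uses 4.38 million litres of water in a year:

```python
HOURS_PER_YEAR = 8760  # assumption: ignores leap years


def wue(site_water_litres: float, it_energy_kwh: float) -> float:
    """WUE = annual site water use (L) / annual IT equipment energy (kWh)."""
    return site_water_litres / it_energy_kwh


# Hypothetical site: 1,000 kW average IT load for a full year
it_energy = 1_000 * HOURS_PER_YEAR  # kWh
print(f"WUE: {wue(4_380_000, it_energy):.2f} L/kWh")
```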

Remember Microsoft’s Project Natick? That experimental undersea data center initially felt like science fiction. While Microsoft has ended the experiment with no further plans announced, China has actually deployed its first commercial undersea data center. The key benefit? Cooling! Though I’m not sure about the potential damage to marine ecology…

AI’s Role in Powering Down

AI workloads are different from standard IT workloads: they run on densely packed accelerators, such as GPUs, that draw far more power and produce far more heat per rack. This means data centers will need to rely on efficient hardware and effective cooling. Newer AI models will undoubtedly add to the energy footprint and push energy demand higher. Many AI hardware manufacturers now use direct liquid cooling for their components. I was amazed when I first heard about this technology! This is exactly what NIWA (the National Institute of Water and Atmospheric Research) uses in its latest supercomputer, Cascade, which is hosted at CDC.

Ironically, AI itself can help manage power and cooling demand in real time. We may also see more and more data centers turning away colocation customers whose hardware isn’t energy efficient.

There’s another interesting development in the US: Microsoft and Google are partnering with nuclear power companies. These deals aim to secure power dedicated to their data centers, potentially giving them long-term stability in pricing and availability.

New Zealand’s Green Edge

New Zealand has some unique advantages when it comes to sustainable data centers:

- Renewable Energy: Approximately 80% to 85% of our electricity comes from renewable resources. Sweet!

- Mild Climate: Our mostly temperate climate (subtropical only in the far north) helps maintain optimal data center temperatures of 16 to 27°C.

Newer data centers are much “greener” than their older counterparts, thanks to technological advancements. That said, I admit I’m sometimes skeptical of the “greenwashing” elements. Sometimes these are just marketing tactics by cloud companies. Purchasers of cloud and colocation services should be clear about their objectives and specify the measures they want to see, such as PUE, WUE, and renewable energy share. And don’t forget supply-chain and building sustainability!